The elections for the board of the OpenStreetMap Foundation are upcoming and the most interesting part of this for the OSMF members has traditionally started with the publication of the self-presentations of the candidates, which happened during the last few days.

My impression after the first reading of these is that the OSMF seems to have arrived in the post-truth era. Some might claim this has already happened years ago, but to me – and i have been a keen observer of the OSMF for many years now – it became obvious with this year’s election.

The concept of post-truth does not mean the communication of lies or untrue statements; it refers to the trend of defactualization, where the distinction between false and true becomes meaningless in communication and discourse in a certain social context.

There is a euphemism for this style of defactualized presentation commonly used in corporate PR and marketing these days: storytelling. The aim here is to create a certain impression or emotional reaction from the recipient of the communication, largely sidestepping the facts surrounding the topic. Or in a nutshell: If the story is compelling it does not matter if it is convincing.

The irony is that originally, the term storytelling referred to something rather different: The development and communication of fictional stories either for the purpose of transporting abstract philosophical ideas and moral values in a practically robust fashion in a culture without writing or for the purpose of communicating ideas and thoughts that are subject to social taboos or otherwise not socially accepted in a non-fictionalized form.

My suggestion to the OSMF members for this year’s board election is therefore: Read the self-presentations of the candidates as fictional stories – like fairytales, mythology etc. And do not regard what you read there as propositions in the philosophical sense. If you do so i think you will be able to derive much more of value from your reading. Whether that is then helpful for your voting decision is a different matter of course. But you will definitely spare yourself some of the pain i had in my first reading of these.

I had considered analyzing this year’s board candidates’ self-presentations for examples of post-truth era communication techniques. But that does not seem right to me. After all it is not the candidates that are primarily to blame here. It is the OSMF members who are – collectively – too passive to set a higher bar for the candidates they elect for the board. But more than that: I also have the impression that quite a few OSMF members have fully accepted the end of history and simply can’t imagine a different kind of candidate. To use an old metaphor: They drink the sand because they don’t know the difference.

For those i will try here – in the tradition of storytelling in the original sense – to tell the story of a fictional board candidate who stands in contrast with the largely defactualized self-presentations we are seeing in this year’s election.

Manifesto

Dear OSMF members,

i am not actually a candidate in this year’s OSMF board election. Nor am i actually a real person that exists outside the mind of my creator, the author of this blog post, and the minds of you, esteemed readers of these lines. None the less i hope that my fictional candidacy in this year’s election inspires you to imagine the idea that some actual candidates might articulate and pursue some of the thoughts i present here in my fictional candidacy with the same clarity and determination as i do.

Feel free to customize the image that my creator has produced in these lines to your cultural or personal preferences and expectations.

Motivation and Objectives

What i hope to accomplish during my time on the OSMF board i am going to outline in the answers to the questions provided. The reason why i make these things my objectives is that, after years of observation and contemplation, i have come to the conclusion that these are crucial for a sustainable development of the OSMF under the goal of supporting the OpenStreetMap project. I am aware that the practical pursuit of many of these objectives is likely going to see opposition from substantial economic interests around the OpenStreetMap project. Whether i succeed in pursuit of these will depend on your support and that of the rest of the OSM community. I am going to do my best to accomplish these objectives within the OSMF board, but especially in the long term i will only be successful in that if i have active support for that from the OSMF members and the OSM community.

Conflict of Interest Management

First of all i reject the premise of the question that Conflicts of Interest are something that can be universally managed or mitigated without actually addressing the Conflict of Interest itself.

My personal situation allows me more than many others to pursue the interests of the OSM community even if they are in conflict with weighty economic interests around the project. I am not rich by any measure but my livelihood, my professional career and my personal goals are out of reach for even the most influential financiers of the OSMF or of economic interests around OpenStreetMap. So, even if those would want to put pressure on me personally to put their interests above those of the OSM community, the possibilities for that are very limited.

That being said there are, of course, going to be situations where i am going to have Conflicts of Interest in my work as a board member. I hope that i am going to be able to identify such situations myself in most cases. But i am aware that i cannot expect this to work reliably in all cases, so i will need to resort to other mechanisms as backups, which i will discuss in a second. Before that i am going to outline the three measures i expect to consider when i have become aware of a Conflict of Interest as a board member:

- Selectively removing myself from the part of my work as a board member where i have the conflict. This only works in cases when the work in question, as well as the Conflict of Interest, are tightly limited in scope. In case of any kind of decision making, removing myself would mean both from the actual formal decision and the complete deliberation process leading up to the decision.

- Temporarily suspending my work as a board member altogether. This only works in cases where the Conflict of Interest in question is inherently limited in duration.

- Resigning my position as board member. This is always the ultimate fallback option in cases of Conflicts of Interest where no other measure can reliably ensure that the secondary interest does not affect my work as a board member.

All of these three options are going to be on the table whenever i become aware of a Conflict of Interest as a board member. I am going to transparently and publicly document the reasoning behind my decision of what measure to choose in any such case, so that the OSMF members have the opportunity to check on that reasoning and – if necessary – take the appropriate steps to insist on a different option.

Now back to the problem of reliably identifying Conflicts of Interest. The history of the OSMF board has shown beyond doubt that self-identification does not reliably work and even cross-checking among the board members does not, because the other board members are either equally unable to see the conflict or feel socially inhibited to openly point out the conflict of another board member (see here, here and here).

Therefore, other mandatory mechanisms will need to be introduced and this is going to be one of the things i am going to pursue as a board member. Transparency in decision making processes is going to be a key element of that (see next question). Beyond that, i concretely envision to suggest the following measures to the OSMF membership as binding to the board in their work:

- Creating a mechanism by which decisions in which a Conflict of Interest has been overlooked are automatically nullified.

- Introducing mandatory formal reporting of any Conflict of Interest identified as such by a board member to the OSMF membership.

- Creating a mechanism by which Conflicts of Interest can be reported anonymously by any OSMF member.

- Creating an oversight mechanism (ethics council) formed by the local chapters that oversees the board’s work with regards to Conflicts of Interest and that reports their findings to the OSMF membership as an additional control mechanism.

Transparency and Accountability

Transparency and Auditability of the work of the OSMF have seen a massive decline during the past years relative to the state after the transparency initiative of the 2015 board:

- While originally all of the board meetings were public, the meetings are now split into private mid-month meetings where actual deliberation of decisions seems to take place and the public meetings where formal decisions are still made but in-depth discussion does not typically take place any more.

- Policy document drafts are now routinely published only in the last minute before the formal decision (either during the meeting or in the day before the meeting), depriving the OSMF members of the possibility to review them and provide input before the decision. This communicates substantial disrespect from the board for the OSMF membership.

- While some years ago the OSMF board routinely discussed policy development with the OSMF membership at an early stage (both on the board and on members’ initiative) this has almost completely stopped. And if the membership is consulted at all, it is usually about approval of a final draft as a done deal after the main deliberation has already happened in private.

- The board does not seem to be bound by their own policy – for example the commitment to open channels is rather loosely interpreted – see here.

- The board’s main method of internal communication and deliberation seems to have moved from channels with a permanent record (email) to volatile channels (IRC/Matrix) preventing both independent auditing of the board’s decision making and the board’s own ability to look up the genesis of past decisions.

This is a highly problematic trend on multiple fronts – in terms of oversight and dealing with Conflicts of Interest (see previous question), in terms of recruitment of competent volunteers (if no information is visible from the outside that massively steepens the learning curve for everyone who wants to get involved because they can only access pertinent information after they have become part of the inner circle) and in terms of public communication (transparency by default spares a lot of work in explicitly and selectively communicating only what is meant to be known to the public). Also the compartmentalized information management in the current OSMF stands in sharp contrast with the way the OSM community communicates otherwise, which creates a substantial culture gap between the two.

As a board member i am going to

- make sure the OSMF board moves back to channels with a permanent immutable record for internal deliberation by refusing to participate in non-public inner-board communication on channels without such record. The need to be able to look up and refer to past communication in deliberation of the board is so obvious that i feel forcing the hand of the rest of the board this way is warranted.

- start publicly reporting on developments in the board as soon as they happen even if they would otherwise not be known to the public at that time. I am not going to disclose content of communication without agreement in the board of doing so, but i believe that the OSMF membership and the OSM community as a whole – who the board is meant to serve – have the right to know for example when the board starts to draft policy affecting the OSM community or starts discussing to contract someone to do certain work for the OSMF and i don’t think, as an elected board member, i would be infringing on anyone’s moral or legal rights by reporting in a timely fashion about such things happening as they do.

- present and argue for the advantages of a public-by-default approach to board work with the other board members and attempt to roll back the roll back of the transparency of board work of recent years. I am aware that this might be an uphill battle against an existing organizational culture of compartmentalization. But the benefits of a more open work culture are difficult to ignore.

Strategic Vision and Sustainability

The strategic planning initiated by Allan Mustard in the OSMF was a significant step and it could have been a good starting point for further work in that direction despite some shortcomings. But what the board then made out of this ambitious start, by essentially removing all the clarity and stringency in the original document and replacing it with soft and vague expressions of wishes, amounted to nothing less than moving back to the muddling through the plan originally aimed to overcome.

As a board member i would aim to re-initiate the strategic planning process, starting with the original work of Allan Mustard. I would aim to

- Fill the thematic gaps and omissions in the original plan.

- Move the discussion of further development to a public venue, giving all interested community members the opportunity to read and provide feedback and inviting people with experience in specific fields to provide advice (in public to provide checks and balances against lobbying for special interests).

But i would in particular aim to introduce a strict subsidiarity principle into the strategic planning of the Foundation. The OSMF should not pursue tasks that can be equally or better handled on a local level or by thematically more specialized organizations. The OSMF needs to focus itself on its core functions as outlined by the OSMF mission. This is more than enough of a challenge with the continued growth of the project.

I also consider a move of the OSMF to the EU a strategic necessity, as well as a chance to restructure the organization in a more robust and more scalable way. The necessity stems from the legal protection of the OSM database under the ODbL, which is based on European database rights. And the move would provide the chance to design a new organization with proper formalized checks and balances, because it would not be subject to the tight constraints of UK corporate law. I envision a structure where the operational management of the organization and policy development are separated and where the local chapters have a formal role of oversight over key aspects of the organization (like decisions about the rights to the OSM database, trademarks and the mapper accounts).

The steps taken by the OSMF board so far regarding a move to the EU seem to suffer from a lack of transparency in decision making. In particular the choice of countries to consider moving to seems to be based on behind-the-scenes lobbying of special interests, rather than an open and transparent selection process. And it also suffers from the lack of ambition with regards to restructuring the organization. As is, it seems likely that the board would try to copy the highly disadvantageous structure of the existing OSMF as required by UK corporate law into the EU and this way miss a huge opportunity that is likely never to come again. Bottom line: I think the whole process needs a restart with a more transparent process and a broader discussion of how the new organization should be structured.

Decision-Making and Collaboration

I do not plan to collaborate with anyone during my time on the board, but I hope to cooperate successfully with my fellow board members, with the working groups, with the OSMF membership and with the OSM community as a whole. The key to successful cooperation is a basis of shared goals and values to start from. In the case of OpenStreetMap, this cannot be shared cultural values but has to be the basic ideas and values of cooperative collection of local geographic knowledge. Everything else (like the traditional OSMF mission and its interpretation) needs to derive from that. If there are disagreements in the OSMF about how to practically pursue the OSMF mission, i would try to solve that through arguments and reasoning with regards to the basic ideas and values of OpenStreetMap. In other words: I would ask others to try to convince me that what they suggest is in support of these and i would try to convince them that what i suggest is beneficial in that regard.

As far as the organizational structures within the OSMF are concerned – i think the original idea of largely independent working groups is very good. But the board has, in recent years, substantially crippled their development by frequently interfering ad hoc with their independence and by creating board committees with non-board members as a competing, less independent but much more favorably treated organizational structure. This whole concept needs to be re-considered together with reducing the scope of the OSMF’s own activities in favor of the local chapters.

What would definitely end with me on the board is the current practice of keeping inner-board conflicts and disagreements under cover and the attempts to hide such from the membership. This is not a good way of dealing with disagreement and such pretense damages the credibility of the board as a whole to the outside.

Fundraising and Resource Development

According to the self-presentation of the current OSMF in public, fundraising seems to increasingly turn into a goal on its own. That is not a good development. The expenses of the OSMF have quite significantly increased over the past years but the level of actual internal organization of the Foundation has not kept up with that. And that is not a problem caused by the lack of money, it is caused by the lack of determination in ending the muddling through that Allan Mustard has rightfully criticized in his sketch for a strategic plan. If the OSMF would try to address this by raising and spending additional money (or in other words: try to outgrow the problems through financial expansion) the result would be an organizational automaton the de-facto primary goal of which would be to sustain itself and grow as an organization, meaning raising funds for the main purpose of continuing to raise funds.

As a board member i would aim to follow a different approach. I would try to consolidate or even reduce (based on the subsidiarity principle, by ending the OSMF’s involvement in activities that are outside its core functions) the current operational scope of the organization and focus on putting that scope on a more solid and robust basis. That involves in particular

- developing, consolidating and publishing internal policy that is binding for everyone regarding the routine operational activities of the organization. In other words: Writing a comprehensive OSMF handbook covering the current operational scope of the organization.

- detailing the strategic planning to the level that it provides clear guidance to all the operational activities.

- opening up to the wider OSM community in operational activities and strategic planning and making supporting the OSMF through volunteer work attractive again for intrinsically motivated grassroots volunteers without a professional motive.

Of course, even with this approach followed with determination, the OSMF will need continuous fundraising to sustain itself. The goal here needs to be IMO to better diversify the funding. I would try to establish the following goals:

- No more than 10% of the OSMF’s income should at any point of time come from a single source (and indirect funding via proxy would need to be considered here of course)

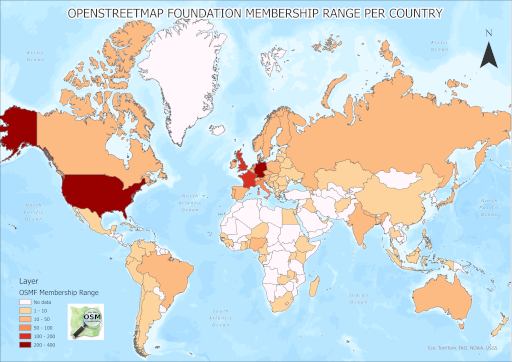

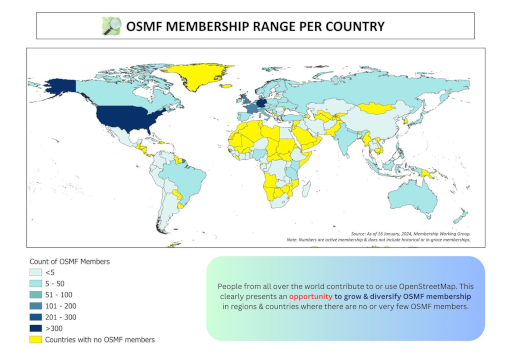

- No more than 30% of the OSMF’s income should at any point of time come from a single country (again with indirect funding through dependent organizations in other countries considered)

- No more than 30% of the OSMF’s income should at any point of time come from a single thematic class of financiers (currently for example digital technology companies could be a class where this is potentially an issue)
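To make the three limits concrete, here is a minimal sketch of how they could be checked against a list of income entries. The record layout (the keys `source`, `country`, `sector` and `amount`), the function name and all figures are assumptions made purely for illustration; they do not describe actual OSMF finances or bookkeeping. Indirect funding via proxies is not modelled and would have to be resolved to the real source before running such a check.

```python
from collections import defaultdict

# Limits taken from the three goals listed above.
LIMITS = {"source": 0.10, "country": 0.30, "sector": 0.30}


def concentration_violations(donations, limits=LIMITS):
    """Return (dimension, name, share) for every group above its limit.

    `donations` is a list of dicts with the hypothetical keys
    "source", "country", "sector" and "amount".
    """
    total = sum(d["amount"] for d in donations)
    if total <= 0:
        return []
    violations = []
    for dimension, limit in limits.items():
        # Sum the income per source, per country or per sector.
        sums = defaultdict(float)
        for d in donations:
            sums[d[dimension]] += d["amount"]
        for name, amount in sorted(sums.items()):
            share = amount / total
            if share > limit:
                violations.append((dimension, name, share))
    return violations


# Made-up figures, purely for illustration.
example = [
    {"source": "A", "country": "X", "sector": "tech", "amount": 50},
    {"source": "B", "country": "Y", "sector": "tech", "amount": 30},
    {"source": "C", "country": "Y", "sector": "public", "amount": 20},
]

for dimension, name, share in concentration_violations(example):
    print(f"{dimension} {name}: {share:.0%}")
```

With only three financiers every one of them necessarily exceeds the 10% limit, which illustrates how demanding that goal is: meeting it requires at least ten independent sources of income.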

Accomplishing these will likely require cooperation with local chapters around the world. The OSMF therefore needs to find a sustainable way to handle the competitive situation with the local chapters with regards to funding. The idea currently pursued to incentivize a funding dependency of the local chapters on the OSMF by trying to take a proxy and gatekeeper role between big corporate financiers and the local chapters is a very bad one in my opinion. The opposite approach would, in my opinion, be better, with the local chapters being the direct contact with local financiers of OpenStreetMap and handling the local financial bureaucracy. This would also conform with the subsidiarity principle i discussed above, while the current approach does not.

Handling Legal and Political Challenges

A thorough assessment of legal risks faced by the OSMF is quite clearly long overdue. This should be combined with the development of mitigation strategies that prevent core assets of the OSMF (the database rights, trademarks and mapper database) from being put at risk in case of serious legal challenges. Ideas for that can be implemented in the course of a move to the EU as sketched above.

On the political front, the OSMF still has a lot to do to repair the damage incurred by the infamous Crimea decision in 2018 (where the board overruled standing OSMF policy, community consensus and the data working group in an attempt to pacify some loud voices articulated in the OSM community and political pressure). The board needs to actively reaffirm standing behind the core principles of OpenStreetMap in documenting the observable reality on the ground – even in cases where this is politically not fashionable. A consistent stance based on clear and broadly supported principles in the long term is a much better defensible position than opportunistic adjustment to the wind direction of the day.

State of the Map

The operational planning of the State of the Map conference should remain at the discretion of the SotM working group and i would, as board member, oppose any attempt of ad hoc interference with that as it happened in the past. Strategically, i would encourage the SotM working group to develop the concept of the State of the Map conference in the following directions:

- supporting predominantly regional conferences in a rotating fashion rather than moving an international conference around the world. This would IMO be more suitable to celebrate the cultural diversity of the OSM community worldwide.

- developing concepts of a decentralized conference with different local meetings of people connecting digitally to the event.

- developing asynchronous formats where people can watch presentations at a time of day of their choosing and asynchronous discussion between presenter and audience takes place afterwards.

And to be clear: The OSMF cannot and should not aim to ensure that the conference is safe and accessible for all members of the global community. That idea would be completely impractical if global community really means global community – and it would be morally highly questionable if global community means only the wealthy international OSM jet set. The OSMF should be open to support an international conference in any part of the world where there is an active local OSM community willing and able to organize such an event. If, in some years, that happens to be in places which most people from Europe and North America practically won’t want to or are not able to visit, that is to be accepted.

Your Community Contributions

I have contributed to OpenStreetMap on various levels – in mapping, in tagging discussions, in software development, in map design and in public communication to name the most important. But i have no strong focus on any of these fields in particular that would put me at risk of aiming to cater to the specific interests of that field.

I have also followed the development of the OpenStreetMap Foundation for many years as far as this is possible from the outside as a normal member who is not part of the inner circles. And i have frequently talked to board members during that time about the OSMF. But i have never been involved in the OSMF myself beyond being a member – hence it is safe to say i have never been socialized in the organizational culture of the OSMF. This gives me a relatively well informed outside perspective.

I am not a native English speaker and besides my native language and English i also have basic knowledge of another language with wider use across different countries (like French, Spanish, Russian, Arabic, Chinese – pick whatever you like most). This helps me realize that by limiting your horizon to English language only (like the OSMF mostly does at the moment) you miss out on significant cultural diversity and lose immense opportunities. This is why the OSMF urgently needs to start embracing more diversity in languages in its organization. That is a challenge practically, but IMO also a strategic necessity.

Promoting Community and Attracting Volunteers

Recruitment of qualified and intrinsically motivated volunteers has become a big problem for the OSMF during the past years. The OSMF has alienated a lot of highly qualified long term volunteers from the OSM community over the past years through the principle of “people whose work we know and enjoy” as a recruitment paradigm for both paid and unpaid work in the OSMF assigned by the board. This not only urgently needs to end, the OSMF board also needs to take substantial steps to win back credibility with the larger OSM community where the do-ocratic principle is highly valued.

The OSMF also has a lot of homework to do with regards to inclusivity. Getting involved in the OSMF without substantial English language communication ability and without embracing the OSMF organizational work culture is next to impossible. And people usually don’t even have the chance to get a good idea of these requirements before actually taking the step to get formally involved because most of the OSMF’s activities happen behind closed doors and are only accessible to those who have decided to get formally involved. That is literally the opposite of being welcoming.

Due to the independence of the Working Groups (which is important to hold up) the board has only limited ability to directly top-down change this situation. But we can lead by example and provide helpful recommendations and organizational support for the working groups to reduce the barrier to get involved as volunteers.

There are a number of concrete steps i would like to pursue in that regard as board member.

- Set up a place where work for the OSMF that can be independently pursued by volunteers is openly advertised for community members to take on without a formal barrier. This of course will require more real time transparency in the work of the OSMF in general (so people understand the context of these tasks). Initially that would be for tasks created by the board but the idea would be that this approach could also be adopted by the working groups.

- Move more active work from the OSMF website (with top-down control of editing rights) and elsewhere to the OSM wiki (where all OSM community members can get actively involved)

- Being more transparent in all practical board work as this reduces the barrier and increases the incentive to get involved.

- When consulting the larger community in a formal or informal way specifically inviting and valuing critical and inconvenient comments and reactions.

The real challenge is going to be breaking the English language dominance. I have no definitive approach how to tackle this at this time. But not accepting the currently dominant paradigm that the English language dominance is inevitable is a start. Revising the so-called diversity statement in that matter would be a symbolic first step.

Technology and Innovation

OpenStreetMap relies heavily on technology but is not a technology project itself. That is important to keep in mind for the OSMF. So technology for the OSMF always needs to be a means to the end of supporting the cooperative mapping efforts of the OSM community, not a goal on its own.

The OSMF needs to keep a close eye on the technologies OpenStreetMap relies on in its core functions and their development. But in doing so we also need to keep in mind the basic principle to support, but not to control the project. It is highly doubtful if the OSMF taking over the development of some core tool in operation of OpenStreetMap by paying a professional developer would be of long term benefit for the project. OpenStreetMap has, over its history, so far been able to attract and motivate the developers of the technology it relies on on the operational level and the OSMF’s role here should be to ensure it continues to do so, not to become a substitute motivator.

Beyond these fundamental operational needs lies the field of strategic investment into technologies needed in the long term by the OSM community. The focus of the OSMF board here should lie in supervising and guiding the strategic planning in that regard to provide the necessary guidance for the Engineering Working Group in practical implementation of these strategic investments.

Since strategic investments in technology typically have a significant size, there is going to be a necessity for the OSMF to pursue cooperation with other organizations – like OSGeo, or academic institutions as far as fundamental technological innovations are concerned, or other potential users with overlap in strategic needs.

My own first focus as a board member in this domain would be to keep a careful eye on how technological decisions affect the OSM community on the social level. The important thing here is that technology should have the function to support the OSM community in their needs and their consensus decisions. The technology should not be used to steer the OSM community in a certain direction and it is the board’s task to ensure that this does not happen under the aegis of the OSMF.

My second focus would be to ensure that the decision of where to strategically invest the limited means of the OSMF is not primarily guided by lobbying interests of parties who want to profit from the investment, but by a careful and neutral look at the actual strategic needs of the OSM community for its work. Doing the strategic planning in that regard publicly is going to be key to accomplish that.

Data Quality and Protection

We have here the two fairly separate fields of vandalism (i.e. malicious actions) and quality assurance (i.e. the concern about the quality of well intended edits of the data). The first field is handled on the operational level by the working groups, the board should not get involved here. It would be important to give this point substantial consideration in the strategic planning and to involve the concerned working groups in strategy development there.

Regarding quality assurance – this is primarily a matter for the mapper community and the OSMF should not get involved here beyond this potentially being part of the strategic investment in technology (see previous section). One exception: If quality issues are directly or indirectly caused by organized mapping activities. Here adjustments of existing regulation or new regulation could be required in the future.

I see no acute need for adjustments in policy here, but the current organized editing rules have been in place for quite some time now without changes. So one thing i would do as a board member is to initiate a comprehensive review of the organized editing policy and evaluate the need for adjustments. Quite a bit of research has been published during the past years on organized editing in OSM so there is a substantial data basis for such a review. This data should be supplemented by direct input from local mappers regarding the effects of organized activities on their work.

Perspective on Open Source

The fundamental idea of OpenStreetMap is directly tied to the idea and the philosophy of open data, which in turn is closely related to the idea of free open source software and open technology in general. So, as far as the technology the OpenStreetMap Community relies on in its basic work is concerned, the need for this technology to be open is pretty much a given (although, to be accurate, that principle currently largely ends when it comes to hardware technology).

How the OSMF manages its membership database is fairly unrelated to that so you could argue the same principle does not apply here. But the OSMF has a FOSS policy and that derives not only from the philosophical connection between open data and free open source software, but from practical considerations as well. It is practically beneficial for the OSMF to use FOSS rather than proprietary software for multiple reasons:

- it is less costly because you avoid license costs.

- it avoids creating a dependency on a proprietary software provider.

- it has security benefits since you don’t need to run code that you can’t inspect (in particular relevant for use cases involving sensitive personal data).

- it massively lowers the barrier for volunteer involvement because volunteers can use the same software independently without a license cost hurdle.

- it creates an additional incentive for volunteer involvement because volunteers can get practice in using software that they can then also use otherwise without additional license costs.

In the specific case of the task of managing the OSMF membership database the following additional argument applies:

As far as i understand it the incident that triggered the recent discussion was caused by a change in the membership signup process being deployed without proper testing – which would have revealed the problem. Such testing is much easier to organize in a volunteer-based project if no proprietary software is involved, because any volunteer could perform the testing on their own infrastructure independently. With proprietary software licenses the testing would either need to happen on OSMF infrastructure or the tester would need to be contracted by the OSMF (depending on the terms of the license). In other words: FOSS solutions are much more compatible with the openly cooperative and decentralized work culture of the OSM community than proprietary solutions.

The bottom line is: I see plenty of arguments that speak for continuing to use FOSS solutions in managing the OSMF membership database and none that speak for a proprietary solution.

Perspective on Overture Maps

Overture Maps is essentially an OSM data user like anyone else. What distinguishes them from other data users is that their aim is to re-distribute OSM data in a modified form on a large scale – something that is rare among commercial OSM data users so far.

For the OSMF it is important to treat them like any other data users. That means watching over their compliance with the ODbL. Since they distribute combinations of OSM data with other data this in particular concerns the share-alike rules of the ODbL. These have been neglected in the guidelines regarding the ODbL developed by the OSMF so far and it might be advisable to fill that gap.

And we should communicate to them clearly and openly that we expect them to support the project whose data they use extensively, by the Linux Foundation becoming a corporate member of the OSMF. Similarly, we should of course look into contacting large scale data users who use OSM data through Overture and equally suggest that they support OpenStreetMap as the original source of the data they use.

Conclusion

That ends the presentation of my fictional candidacy for the OSMF board election. If you like what you have read then don’t vote for me – because you can’t. Instead you should get out of your mapping armchair and start working towards what you like in what i presented above. The truth is that the OSMF will not change for the benefit of the OSM community unless the OSMF members push it with determination to do so. So if you find my analysis of the situation of the OSMF and the suggestions i derive from it convincing on any of the topics discussed, then it is up to you to pursue these ideas. Because no one else will.